The modern manufacturing landscape is increasingly powered by data-driven insights, where analytics, artificial intelligence, and connected devices transform how products are designed, produced, and delivered. As Industry 4.0 and the Industrial Internet of Things (IIoT) mature, data science has moved from a novelty to a necessity, driving sustainability, efficiency, and competitive advantage across the entire product lifecycle. This expansive shift opens abundant opportunities for data scientists, engineers, operations professionals, and business leaders to collaborate in creating smarter, more resilient manufacturing systems.

The Rise of Data Science in Manufacturing

Manufacturing has evolved from labor-intensive, mechanical processes to highly automated, sensor-rich environments that generate vast streams of data. This transition has given rise to a data-first approach in production, quality management, and supply chain orchestration. The integration of analytics with automation enables real-time decision-making, proactive maintenance, and continuous optimization, reshaping traditional workflows and performance benchmarks.

The current era is defined by the convergence of analytics, artificial intelligence, and automation with manufacturing operations. Industry 4.0 standards guide the deployment of interconnected systems that collect, process, and exchange data across machines, plants, and value chains. This interconnectedness supports advanced data-driven capabilities such as predictive analytics, computer vision for inspection, digital twins for product and process modeling, and autonomous control loops that adjust parameters on the fly. The result is a manufacturing ecosystem that not only reacts to changing conditions but anticipates them, enabling smoother operations, reduced waste, and improved product quality.

At the heart of this transformation lies the premise that every component of the production system can be optimized through data-informed insights: design choices, materials selection, equipment configuration, tooling, labor deployment, operating procedures, quality controls, packaging, and supply chain logistics. Systematically examining and evaluating these factors is essential to running each task as effectively as possible. Data science methods support this scrutiny by turning raw data into actionable intelligence, informing decisions that increase throughput, minimize losses, optimize resource use, and adapt to evolving market demands.

Today’s manufacturing data landscape is characterized by diverse data sources, including sensor streams from machines, energy usage metrics, quality and process-control data, supply chain records, maintenance logs, and product performance data post-release. The analytical workflows span data collection, preprocessing, exploratory analysis, model development, deployment, monitoring, and continuous improvement. Machine learning and deep learning techniques are increasingly deployed to detect patterns beyond human perception, forecast future states, classify defects, and optimize control strategies. The objective is clear: transform data into tangible improvements in efficiency, reliability, and customer value.

In this context, the manufacturing sector increasingly recognizes data science as a strategic capability rather than a purely technical specialty. With the right talent, governance, and infrastructure, data science unlocks new productivity frontiers. The opportunities span a broad spectrum, from tactical improvements in daily operations to strategic shifts in how products are conceived and brought to market. This expansive potential fuels a growing interest in data science roles across manufacturing domains, creating ample career paths for data scientists, data engineers, machine learning engineers, and operations researchers.

Industry 4.0, IIoT, and the Data-Driven Production Landscape

Industrial digitalization is redefining how factories operate. Industry 4.0 brings together cyber-physical systems, cloud-based analytics, and autonomous decision-making to create responsive, self-optimizing production environments. The IIoT underpins this transformation by connecting sensors, machines, devices, and control systems to collect granular, time-stamped data that feed analytics pipelines in real time or near real time.

This new landscape relies on a layered architecture that blends edge computing, on-premises data centers, and cloud platforms. Edge computing processes data close to the source to reduce latency for time-critical decisions, while cloud-based analytics provide scalable storage and deep modeling capabilities. Data governance and security frameworks are essential to manage the flow of information across the enterprise and to protect sensitive intellectual property and production data.

Digital twins play a central role in this milieu. A digital twin is a dynamic, data-driven representation of a physical asset or system that enables simulation, what-if analysis, and scenario planning. By mirroring real-world behavior, digital twins support design validation, process optimization, and predictive maintenance in a safe virtual environment, without risk to the physical plant. In practice, a digital twin lets engineers test changes to a manufacturing process, assess the impact of new materials, and forecast how equipment will respond under different operating conditions before committing to changes in the live plant.

Data exchange within internal departments and across value chains becomes increasingly important as manufacturers seek to accelerate throughput and optimize end-to-end performance. Interoperability standards, common data models, and secure data-sharing practices enable smoother collaboration between design, production, procurement, logistics, and customer-facing functions. The ultimate aim is to synchronize activities across the entire value chain, ensuring that capacity planning, inventory decisions, and shipping schedules align with demand signals and production constraints.

In concert with these advances, manufacturers are leveraging augmented reality (AR) and mixed reality (MR) tools to augment human capabilities on the shop floor. Technicians can visualize real-time sensor data, assembly instructions, or maintenance guidance overlaid onto the physical equipment, making complex tasks more approachable and reducing the risk of human error. This cognitive augmentation complements automated systems, enabling faster problem solving and more reliable maintenance workflows.

The data-driven production landscape also emphasizes resilience. Advanced analytics enable scenario planning for demand shocks, supply disruptions, or equipment outages, supporting contingency strategies and quicker recovery. By combining predictive insights with robust optimization, manufacturers can maintain service levels, minimize downtime, and protect margins even in the face of uncertainty.

Demand Forecasting and Inventory Management

In manufacturing, inventory management and demand forecasting directly influence financial performance. Inventory carries costs, including capital tied up, warehousing, obsolescence, and risk of spoilage or damage. In a competitive environment, Just-in-Time (JIT) practices are essential to maintain lean inventories while ensuring timely availability to meet customer demand. The balance is delicate: holding too much inventory ties up capital and incurs carrying costs, while too little inventory risks stockouts and lost sales.

Data science provides a rigorous, data-backed foundation for inventory planning and demand forecasting. Traditional methods, such as ABC analysis and conventional safety stock calculations, can struggle to keep pace with the complexity and velocity of modern supply chains. With statistical modeling, machine learning, and probabilistic forecasting, organizations can generate precise, data-driven estimates of future demand and optimal inventory levels.

Forecasting systems in manufacturing increasingly rely on time-series models, causal models, and hybrid approaches. Time-series models analyze historical demand patterns to extrapolate future behavior, incorporating seasonality, trends, and external factors like promotions, competitive actions, and macroeconomic indicators. Causal models attempt to attribute demand drivers directly, enabling what-if analysis and impact assessment for factors such as pricing changes, marketing interventions, and supply constraints. Hybrid models blend the strengths of both approaches to improve accuracy and adaptability.
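As a minimal illustration of the time-series idea described above, the sketch below applies simple exponential smoothing to a short demand history. It is deliberately the simplest member of this model family: it does not handle the seasonality, trends, or external drivers mentioned above. The function name, the smoothing factor, and the demand figures are all illustrative assumptions.

```python
def exponential_smoothing_forecast(demand, alpha=0.3):
    """Return a one-step-ahead forecast using simple exponential smoothing.

    demand: historical demand values, oldest first.
    alpha:  smoothing factor in (0, 1]; higher values weight recent data more.
    """
    if not demand:
        raise ValueError("demand history must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")

    level = demand[0]  # initialise the smoothed level with the first observation
    for observed in demand[1:]:
        # Blend the newest observation with the previous smoothed level.
        level = alpha * observed + (1.0 - alpha) * level
    return level


# Made-up weekly demand in units, for illustration only.
weekly_units = [120, 132, 128, 141, 150, 147]
forecast = exponential_smoothing_forecast(weekly_units, alpha=0.5)
print(f"next-week forecast: {forecast:.1f} units")
```

In practice, a library model that captures trend and seasonality would usually replace this function, but the principle of weighting recent observations more heavily is the same.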

The Just-in-Time paradigm benefits from predictive analytics that inform production scheduling and replenishment timing. By understanding when and how much to order or produce, manufacturing lines can maintain optimal workload, reduce changeover costs, and minimize storage requirements. AI-driven optimization can also help determine optimal lot sizes, reorder points, and supplier selection strategies to sustain lean operations without compromising customer service levels.
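To make the lot-sizing question concrete, the sketch below uses the classic economic order quantity (EOQ) formula, which balances fixed ordering cost against holding cost. This is a textbook baseline rather than an AI-driven method, and it assumes constant, known demand. The function name and the cost figures are illustrative assumptions.

```python
import math


def economic_order_quantity(annual_demand, order_cost, holding_cost_per_unit):
    """Classic EOQ: sqrt(2 * D * S / H).

    annual_demand:         units required per year (D)
    order_cost:            fixed cost per order or setup (S)
    holding_cost_per_unit: cost of holding one unit for a year (H)
    """
    if annual_demand <= 0 or order_cost <= 0 or holding_cost_per_unit <= 0:
        raise ValueError("all inputs must be positive")
    return math.sqrt(2.0 * annual_demand * order_cost / holding_cost_per_unit)


# Hypothetical figures, for illustration only.
eoq = economic_order_quantity(
    annual_demand=10_000, order_cost=50.0, holding_cost_per_unit=4.0
)
orders_per_year = 10_000 / eoq
print(f"EOQ: {eoq:.0f} units, about {orders_per_year:.0f} orders per year")
```

A data-driven approach would typically feed forecast demand into a formula or optimizer like this one, and relax the constant-demand assumption.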

From a data science perspective, inventory management analytics involve a combination of demand forecasting, lead-time analysis, supplier performance evaluation, and constraint-based optimization. Machine learning models can forecast demand with confidence intervals, enabling risk-aware planning. Inventory policies can be refined using optimization algorithms that consider service level targets, demand variance, supplier reliability, lead times, and capacity constraints. The ultimate objective is to align inventory levels with real-time demand signals and the dynamic realities of the production environment.
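The link between service level targets, demand variance, and lead time can be shown with the standard safety-stock calculation. The sketch below assumes normally distributed daily demand and a fixed lead time, so it ignores supplier lead-time variability. The function name and the input figures are illustrative assumptions.

```python
import math
from statistics import NormalDist


def reorder_point(mean_daily_demand, std_daily_demand, lead_time_days, service_level):
    """Return (reorder_point, safety_stock) for a target cycle service level.

    Assumes daily demand is independent and normally distributed, and that
    lead time is constant.
    """
    if not 0.0 < service_level < 1.0:
        raise ValueError("service_level must be strictly between 0 and 1")
    # z-score matching the desired probability of not stocking out in a cycle.
    z = NormalDist().inv_cdf(service_level)
    # Demand uncertainty accumulated over the lead time.
    safety_stock = z * std_daily_demand * math.sqrt(lead_time_days)
    expected_lead_time_demand = mean_daily_demand * lead_time_days
    return expected_lead_time_demand + safety_stock, safety_stock


# Hypothetical figures, for illustration only.
rop, safety_stock = reorder_point(
    mean_daily_demand=40, std_daily_demand=8, lead_time_days=9, service_level=0.95
)
print(f"reorder point: {rop:.1f} units (safety stock {safety_stock:.1f})")
```

Raising the service level or the demand variability increases the safety stock, which is the trade-off between stockout risk and carrying cost described above.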

The role of data science extends beyond numerical forecasts. Prescriptive analytics can propose concrete actions, such as adjusting production plans, reallocating buffers, or rerouting shipments to mitigate risk. Visualization dashboards and alerting mechanisms translate complex analytics into actionable guidance for operations managers, planners, and executives. By embedding data-driven decision-making into daily routines, manufacturers can achieve improved service levels, reduced carrying costs, and more predictable financial outcomes.

In practice, successful demand forecasting and inventory management rely on robust data governance, high-quality data, and cross-functional collaboration. Data quality issues, including missing values, sensor drift, and inconsistent labeling, can undermine model accuracy. Building reliable data pipelines, establishing standard definitions, and maintaining data lineage are crucial steps. Equally important is aligning analytics work with business goals, ensuring that forecasts inform concrete planning decisions and that stakeholders understand and trust the outputs. Through disciplined data management and advanced analytics, manufacturers can achieve a more responsive, cost-efficient, and resilient supply chain.

Computer Vision in Manufacturing

Advances in computer vision, driven by deep learning and convolutional neural networks, have dramatically improved the ability to analyze visual data in manufacturing settings. Image analysis supports object detection, classification, segmentation, and inspection tasks that were previously labor-intensive or error-prone. The practical applications span quality assurance, process monitoring, and automation, enabling faster decision-making and consistent product quality.

In production environments, computer vision systems can automatically identify defects such as scratches, dents, misalignments, surface anomalies, and dimensional non-conformities. Real-time inspection enables immediate corrective action, reducing the volume of defective parts that progress through the line and minimizing rework downstream. Automated defect detection also lowers the reliance on manual inspection, freeing skilled operators to focus on tasks that require judgment and expertise.

The deployment of computer vision solutions often involves training deep learning models on labeled image data. This labeling process, also known as annotation, creates supervised datasets that enable models to learn to recognize patterns and categorize instances accurately. As models are deployed on the shop floor, edge devices process imagery with low latency, ensuring that quality checks occur within production cycles and do not introduce bottlenecks.

Beyond defect detection, computer vision supports broader analysis such as dimensional measurement, pose estimation, and assembly verification. For example, vision systems can measure component dimensions to ensure conformance with specifications, determine assembly correctness, and track product positioning for automated guided vehicles (AGVs) or robotic arms. The combination of high-precision vision and robotics accelerates production throughput while maintaining strict quality standards.

The business value of computer vision in manufacturing lies in accuracy, speed, and consistency. Vision-enabled automation reduces the rate of human error, lowers labor costs, and enhances process transparency. It also enables traceability by capturing audit trails of inspection results, which is increasingly important for regulatory compliance and product stewardship. Furthermore, vision systems can adapt to diverse product variants and evolving quality criteria, supporting mass customization and flexible manufacturing strategies.

Implementing computer vision requires careful consideration of data quality, labeling, and model maintenance. Data labeling must be thorough and representative to avoid bias and to ensure robust performance across lighting conditions, backgrounds, and product variants. Models must be continually retrained as new defects or process changes arise, and there must be robust monitoring to detect model drift. Integration with existing manufacturing execution systems (MES) and enterprise resource planning (ERP) platforms ensures that vision-derived insights translate into concrete actions, such as triggering alarms, initiating corrective tasks, or adjusting automated inspection thresholds.
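Drift monitoring can take many forms. One widely used statistic is the population stability index (PSI), which compares the distribution of a feature at training time with its distribution in production. The sketch below assumes a single scalar feature per image (here a hypothetical mean-brightness value). The function name, bin count, and data are illustrative assumptions, and the 0.25 alert level is a commonly cited rule of thumb rather than a fixed standard.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """Compute PSI between a reference sample and a current sample.

    Bin edges are taken from the reference range; current values outside
    that range are clamped into the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Made-up brightness values: production images are uniformly brighter.
training_brightness = [0.50 + 0.01 * (i % 10) for i in range(200)]
production_brightness = [v + 0.05 for v in training_brightness]

psi = population_stability_index(training_brightness, production_brightness)
if psi > 0.25:
    print(f"PSI = {psi:.2f}: significant shift, consider review or retraining")
```

A check like this can run on a schedule and raise an alert through the MES integration described above when the input distribution moves away from the training data.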

The path to scalable computer vision in manufacturing also involves operational considerations: ensuring latency remains within acceptable bounds, securing data from unauthorized access, and managing the computational footprint of edge devices. As these systems mature, they will increasingly collaborate with other AI-enabled processes, such as predictive quality analytics and automated root-cause analysis, creating a holistic, data-driven quality ecosystem.

Design and Development of Products

Product design and development historically relied on iterative experimentation, designer experience, and prototype testing. This approach, while valuable, often entailed high costs, long lead times, and substantial risk of failure before market validation. In the modern manufacturing era, design and development are increasingly informed by precise, data-driven methods that accelerate innovation and improve product-market fit.

Advanced design software, computer-aided design (CAD), and simulation environments enable rapid prototyping and rigorous evaluation of design concepts. Engineers can explore a wider range of design options, test performance under diverse operating conditions, and identify potential issues early in the development cycle. This capability reduces the dependence on slow, costly physical prototyping and enables faster iteration cycles.

Simulation tools, such as MATLAB and other numerical analysis platforms, allow engineers to model complex physical phenomena, assess the impact of material choices, and optimize functional attributes before committing to fabrication. In many cases, digital twins extend from the product level to encompass the production process itself, enabling virtual testing of manufacturing workflows, assembly sequences, and tooling requirements. This integrated approach supports concurrent engineering, where product and process design evolve in harmony.

Generative design and optimization are increasingly incorporated into product development workflows. These techniques leverage algorithms to automatically generate design options that meet specified performance criteria while minimizing weight, cost, or material usage. Engineers can then evaluate the most promising candidates, refine parameters, and select configurations that best balance competing objectives. By embracing data-driven design principles, manufacturers can reduce development time, lower development costs, and deliver products that better align with customer needs and market trends.

A robust product development process benefits from data science by integrating market research, customer feedback, and performance data from early prototypes. Sentiment analysis, demand signals, and usage data can guide feature prioritization and roadmap decisions. Data-driven design ensures that product capabilities align with real-world requirements, enabling a smoother handoff from design to production and a more predictable path to scale.

Collaboration between design teams, manufacturing engineers, and data scientists is essential to realize these advantages. Data literacy across disciplines enables more effective interpretation of model outputs and more informed decision-making. The end goal is to reduce time-to-market while improving product reliability, manufacturability, and customer satisfaction. When design and production are harmonized through data science, organizations gain a sustainable competitive edge in a rapidly evolving market.

Optimizing the Supply Chain

Precise, timely delivery of manufactured goods hinges on an optimized supply chain. The supply chain must be structured to match production capacity and lead times to customer demand. Inventory planning, supplier management, and logistics execution are key components that determine a company’s ability to satisfy orders while maintaining lean operations. Data science plays a pivotal role in turning supply chain data into actionable strategies.

To optimize supply chains, manufacturers analyze large volumes of data across suppliers, logistics providers, manufacturing sites, and distribution centers. This includes order histories, shipment tracking, lead-time variability, and supplier performance metrics. Data-driven insights inform decisions about where to source materials, how to schedule production runs, and how to allocate inventory across locations to minimize risk and maximize service levels.

Tracking technologies, such as RFID and barcode scanning, enable end-to-end visibility of raw materials and finished goods within warehouses and across the transportation network. This visibility supports accurate inventory accounting, real-time location tracking, and improved coordination between suppliers and manufacturers. When paired with predictive analytics, these capabilities allow proactive management of stock levels, identifying potential bottlenecks before they occur and enabling preemptive actions to maintain continuity.

Beyond visibility, optimization models help align procurement, production, and logistics with demand signals. For example, routing and scheduling algorithms can determine the most cost-effective transportation plans, while inventory replenishment strategies can balance service levels against carrying costs. Such optimization considers constraints such as production capacity, material availability, transportation capacity, and regulatory requirements, resulting in more reliable delivery performance and reduced total cost of ownership.

In practice, supply chain optimization benefits from cross-functional collaboration among procurement, planning, manufacturing, and logistics teams. Data scientists play a crucial role in translating raw data into decision-ready insights, providing scenario analyses, risk assessments, and recommended actions. This collaborative approach ensures that supply chain decisions are informed by both data and domain expertise, enabling resilience in the face of demand volatility, supplier disruptions, or macroeconomic shifts.

The future of supply chain optimization is increasingly aligned with real-time data and adaptive planning. By integrating live data streams from sensors, external market indicators, and supplier dashboards, manufacturers can shorten planning cycles, respond rapidly to changing conditions, and maintain high service levels while controlling costs. This data-driven evolution supports end-to-end value chain optimization, delivering improved efficiency, profitability, and customer satisfaction.

Forecasting Faults and Preventive Maintenance

Proactive maintenance is a cornerstone of reliable manufacturing operations. Modern plants deploy an array of sensors that monitor machine health, capturing data such as temperature, vibration, pressure, and humidity. Analyzing these signals helps detect anomalies, forecast potential failures, and schedule maintenance before unplanned downtime disrupts production. The result is improved asset availability, reduced repair costs, and longer equipment life.

Sensors collect continuous streams of data that reflect equipment state and process conditions. When values deviate from established ranges, maintenance teams can investigate suspected issues and implement corrective actions. The challenge lies in distinguishing benign fluctuations from meaningful precursors to failure. Data science techniques, including anomaly detection, time-series forecasting, and condition-based maintenance models, provide robust tools to interpret these signals.
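One simple way to separate ordinary fluctuation from a meaningful deviation is a rolling z-score: each new reading is compared with the mean and standard deviation of a recent window. The sketch below is a minimal baseline under that assumption, not a full condition-monitoring system. The function name, window size, threshold, and vibration data are illustrative.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings that deviate strongly from the recent window."""
    history = deque(maxlen=window)
    anomalies = []
    for index, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                anomalies.append(index)
                # Keep flagged values out of the baseline so that one spike
                # does not inflate the window's standard deviation.
                continue
        history.append(value)
    return anomalies


# Made-up vibration signal with a single injected spike at index 30.
vibration = [1.0 + 0.01 * ((i * 7) % 5) for i in range(40)]
vibration[30] = 2.5

anomalies = rolling_zscore_anomalies(vibration, window=10, threshold=3.0)
print("anomalous sample indices:", anomalies)
```

Excluding flagged points from the baseline is a design choice: it keeps isolated spikes from masking later ones, but a genuine, lasting shift in operating level would keep being flagged until the window is reset.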

Predictive maintenance uses historical failure data, sensor readings, and contextual information to forecast the remaining useful life (RUL) of components and to estimate the timing of potential faults. This enables maintenance planning that minimizes downtime and extends asset life. Implementing such programs requires a combination of statistical modeling, machine learning, and domain knowledge about equipment and processes.
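A very rough illustration of RUL estimation is to fit a straight line to a degradation indicator and extrapolate to a failure threshold. The sketch below assumes the indicator rises linearly with operating time and that the threshold is known from domain expertise. Real programs typically use richer statistical or machine learning models. The function name and data are illustrative assumptions.

```python
def estimate_rul(times, indicator, failure_threshold):
    """Estimate remaining useful life by linear extrapolation.

    times:             operating hours at each measurement, increasing
    indicator:         degradation indicator at each time (higher is worse)
    failure_threshold: indicator level treated as failure
    Returns remaining hours after the last measurement, or None if the
    fitted trend is not increasing.
    """
    n = len(times)
    if n < 2 or n != len(indicator):
        raise ValueError("need at least two paired measurements")
    t_mean = sum(times) / n
    y_mean = sum(indicator) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("measurement times must not all be equal")
    # Ordinary least-squares slope and intercept.
    slope = sum((t - t_mean) * (y - y_mean) for t, y in zip(times, indicator)) / sxx
    intercept = y_mean - slope * t_mean
    if slope <= 0:
        return None  # no upward degradation trend to extrapolate
    time_of_failure = (failure_threshold - intercept) / slope
    return max(time_of_failure - times[-1], 0.0)


# Made-up bearing vibration readings (mm/s) against operating hours.
hours = [0, 100, 200, 300, 400]
vibration_rms = [2.0, 2.5, 3.0, 3.5, 4.0]
rul = estimate_rul(hours, vibration_rms, failure_threshold=7.0)
print(f"estimated remaining useful life: {rul:.0f} hours")
```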

Root-cause analysis is another critical component of preventive maintenance. When faults are detected, data-driven methods help identify underlying drivers—such as a deteriorating component, an operational change, or environmental factors—that contribute to failures. Understanding the root cause supports targeted interventions and more durable equipment reliability improvements over time.

A successful fault-forecasting and maintenance program depends on data quality, sensor reliability, and robust data pipelines. It requires systematic data collection, labeling of events, and consistent maintenance records. It also depends on integrating maintenance recommendations with maintenance management systems, work orders, and inventory planning so that they can be acted on in time. When done well, predictive maintenance reduces unexpected downtime, lowers maintenance costs, and improves overall equipment effectiveness (OEE).
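Overall equipment effectiveness is conventionally computed as the product of availability, performance, and quality. The sketch below shows that calculation for a single shift. The function name and the shift figures are illustrative assumptions.

```python
def overall_equipment_effectiveness(
    planned_minutes, run_minutes, ideal_cycle_minutes, total_count, good_count
):
    """Return (oee, availability, performance, quality) for one period.

    availability = run time / planned production time
    performance  = (ideal cycle time * total count) / run time
    quality      = good count / total count
    """
    if planned_minutes <= 0 or run_minutes <= 0 or total_count <= 0:
        raise ValueError("planned time, run time, and total count must be positive")
    availability = run_minutes / planned_minutes
    performance = (ideal_cycle_minutes * total_count) / run_minutes
    quality = good_count / total_count
    return availability * performance * quality, availability, performance, quality


# Hypothetical shift: 480 planned minutes, 60 minutes of downtime.
oee, availability, performance, quality = overall_equipment_effectiveness(
    planned_minutes=480,
    run_minutes=420,
    ideal_cycle_minutes=1.0,
    total_count=378,
    good_count=359,
)
print(f"OEE: {oee:.1%} (A={availability:.1%}, P={performance:.1%}, Q={quality:.1%})")
```

Because unplanned downtime lowers availability directly, tracking OEE before and after a predictive maintenance rollout is one way to quantify its effect.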

Embracing data-driven maintenance also supports continuous improvement. By analyzing failure patterns and maintenance outcomes, organizations can refine equipment designs, update preventive maintenance schedules, and adjust operating procedures to mitigate recurring issues. The result is a more resilient production environment that can absorb stressors, adapt to changing conditions, and sustain high performance.

Data Science Challenges in Manufacturing

Despite the promise of data science in manufacturing, the journey is not without obstacles. Organizations seeking to harness analytics must navigate a landscape of technical and organizational challenges that can impede progress if not addressed thoughtfully.

- Lack of Technical Personnel

Although data science has become a widely discussed concept, there remains a shortage of skilled and experienced professionals who can bridge analytics with manufacturing domain knowledge. This talent gap can hinder the ability to design, implement, and sustain data-driven solutions. Turnover and the cost of ongoing training add financial pressure, especially for manufacturers with complex processes and specialized equipment. Bringing in the right mix of data scientists, data engineers, machine learning engineers, and industrial engineers is essential to realize the full potential of data-driven manufacturing.

- Managing Large Amounts of Data

Today’s manufacturers generate enormous volumes of data from sensors, machines, quality records, ERP systems, and supplier networks. While collecting data is increasingly easy, the real challenge lies in extracting value from raw data. Cleaning, transforming, and harmonizing disparate data sources require robust data pipelines and governance. Deciding whether to process data on the cloud or on premises also matters for latency, security, and cost. Data storage needs, data latency, and the complexity of integrating diverse data schemas can complicate the analytics journey.

- Coordination Among Management and Stakeholders

Implementing data science in manufacturing requires alignment across multiple departments, including production, planning, marketing, and the data science team itself. A deep understanding of business intelligence, data science, and manufacturing technology is necessary to extract maximum benefit. Gaining buy-in from senior leadership and encouraging cross-functional collaboration can be challenging. Resistance to change, misaligned incentives, and differing priorities can slow progress. Overcoming these barriers involves clear value articulation, demonstrable quick wins, and structured governance that coordinates data initiatives with enterprise strategy.

- Data Quality and Governance

The effectiveness of data-driven decisions hinges on data quality. Inconsistent sensor calibrations, missing data, mislabeling, and drift in measurement systems can degrade model performance. Establishing data governance, data lineage, and standardized data definitions is critical. Without governance, models may be trained on biased or outdated data, leading to unreliable predictions. Ongoing data quality monitoring, validation procedures, and data stewardship roles help sustain trustworthy analytics over time.

- Integration with Legacy Systems

Many manufacturing plants operate with a mix of legacy control systems, MES, ERP, and newer analytics platforms. Integrating these environments can be technically complex and costly. Interoperability challenges may require middleware, adapters, or custom interfaces, which can slow deployment and increase risk. A phased approach to integration, starting with non-critical pilot projects and gradually expanding, can help manage risk while building organizational confidence in the value of analytics.

- Security and Compliance

The digitization of manufacturing assets broadens the attack surface for cyber threats. Protecting sensitive production data, intellectual property, and process control systems is essential. Security considerations range from access controls and encryption to monitoring, incident response, and regulatory compliance. A security-by-design mindset, ongoing risk assessments, and collaboration between IT, OT (operational technology), and data teams are crucial to maintaining a secure analytics-enabled manufacturing environment.

- Change Management and Adoption

Even with robust models and compelling ROI, getting buy-in from operators, managers, and engineers can be tough. Change management involves training, process redesign, and creating a culture that embraces experimentation and evidence-based decision-making. Effective communication, stakeholder engagement, and visible leadership support help ensure adoption and sustained impact.

- Scalability and Maintenance of Models

Deploying models at scale across multiple lines or sites introduces maintenance challenges. Models require monitoring for drift, retraining with fresh data, and version control. Operationalizing AI (moving from prototype to production) demands robust infrastructure, reliable data pipelines, and clear ownership. Establishing a scalable MLOps (machine learning operations) framework supports continuous integration, deployment, and monitoring of analytics solutions.

- Economic and Resource Constraints

Capital expenditure, operating budgets, and competing investment priorities influence the pace of data science initiatives. Organizations must balance the potential long-term gains from analytics with the upfront costs of data infrastructure, software licenses, and specialized talent. Prioritization, staged investments, and clear business cases help allocate resources efficiently.

- Ethical and Environmental Considerations

As data science becomes more pervasive, ethical questions surrounding data privacy, bias in models, and the environmental impact of large-scale computing come to the fore. Manufacturers must consider responsible AI practices, fairness in decision-making, and sustainable computing approaches to minimize energy consumption while maximizing analytical value.

Addressing these challenges requires a holistic approach that combines people, process, and technology. This includes recruiting and developing talent, investing in data infrastructure, implementing governance frameworks, fostering cross-functional collaboration, and embedding analytics into core business processes. When these elements are aligned, data science initiatives deliver meaningful returns and contribute to a more resilient, efficient, and innovative manufacturing ecosystem.

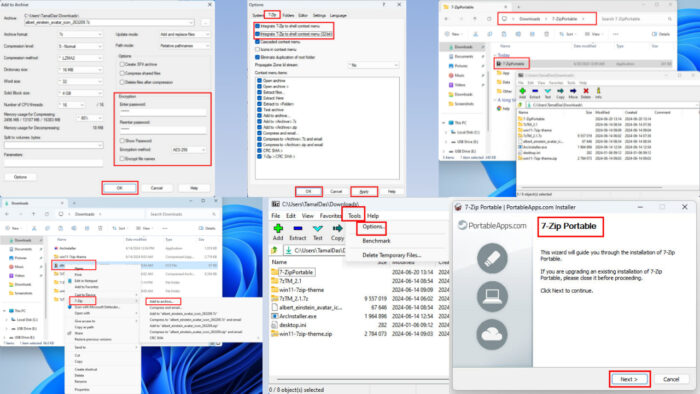

Tools Used by Data Scientists in Manufacturing

The tools used in data science for manufacturing mirror those employed across many data-driven industries, adapted to the specifics of industrial settings. Data scientists engage in data extraction, transformation, and loading (ETL), feature engineering, model training, validation, and deployment. They rely on a mix of programming languages, statistical tools, visualization libraries, and workflow platforms to build, test, and operationalize predictive and prescriptive analytics.

Key tools commonly used include:

- TensorFlow: A leading open-source framework for machine learning and deep learning. Its high performance on CPUs, GPUs, and specialized accelerators supports the training and deployment of complex neural networks used in defect detection, predictive maintenance, and autonomous control tasks.

- Power BI: A business intelligence platform widely used to create dashboards and KPI visualizations for industrial operations. By preprocessing data with structured queries and DAX calculations, engineers and managers can monitor production performance, quality metrics, and energy use in an accessible visual format.

- Matplotlib: A foundational Python plotting library that enables the creation of a wide range of visualizations. It supports quick exploration and communication of data-driven insights, including charts, histograms, and scatter plots that illuminate process relationships.

- ggplot2: A powerful data visualization package in the R ecosystem. It enables sophisticated, publication-quality graphics that help analysts convey complex relationships among production variables, quality indicators, and supply chain metrics.

- Jupyter Notebooks: An open-source environment that supports interactive data analysis, experimentation, and documentation. Jupyter is widely used for exploratory data analysis, model development, and reproducible research, with support for Python, R, and other languages.

These core tools form the backbone of data science work in manufacturing, enabling analysts to build models, visualize results, and communicate insights effectively. Beyond these, many practitioners incorporate additional technologies to address domain-specific needs:

- PyTorch and scikit-learn: For versatile machine learning and deep learning tasks, enabling rapid prototyping, experimentation, and deployment of classification, regression, and time-series models adaptable to manufacturing data.

-

SQL and NoSQL databases: For storing and querying large-scale manufacturing data, including time-series databases, document stores, and relational systems that support analytics workflows.

-

Apache Hadoop and Apache Spark: For processing and analyzing large datasets that exceed single-machine capacity. Spark, in particular, supports scalable machine learning workflows on big data.

-

Tableau, and other BI tools: For advanced data visualization and self-service analytics, enabling stakeholders to explore data, run what-if analyses, and monitor performance across plants and lines.

-

Time-series and forecasting libraries: Tools such as Prophet, statsmodels, and dedicated time-series platforms help forecast demand, capacity, and maintenance needs with robust confidence intervals.

-

Data engineering and MLOps platforms: Solutions that streamline data pipelines, model versioning, deployment, monitoring, and governance across multiple production sites.
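To make the database entry in the list above more concrete, here is a minimal sketch of storing and querying time-stamped sensor readings with SQL. It uses Python's built-in `sqlite3` module purely as a stand-in for a production relational or time-series database; the table name `sensor_readings`, its columns, the sample values, and the `THRESHOLD` constant are all hypothetical.

```python
import sqlite3

# In-memory database, used here for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sensor_readings ("
    " machine_id TEXT NOT NULL,"
    " ts TEXT NOT NULL,"
    " vibration_mm_s REAL NOT NULL)"
)

# Hypothetical vibration readings for two machines.
readings = [
    ("M1", "2024-01-01T00:00:00", 2.1),
    ("M1", "2024-01-01T00:01:00", 2.3),
    ("M1", "2024-01-01T00:02:00", 2.2),
    ("M2", "2024-01-01T00:00:00", 4.8),
    ("M2", "2024-01-01T00:01:00", 5.4),
    ("M2", "2024-01-01T00:02:00", 5.1),
]
conn.executemany("INSERT INTO sensor_readings VALUES (?, ?, ?)", readings)

# Assumed alert threshold; a real limit would come from equipment specifications.
THRESHOLD = 4.5

# Aggregate per machine and keep only machines whose average exceeds the threshold.
rows = conn.execute(
    "SELECT machine_id, COUNT(*) AS n, AVG(vibration_mm_s) AS avg_vibration"
    " FROM sensor_readings"
    " GROUP BY machine_id"
    " HAVING AVG(vibration_mm_s) > ?"
    " ORDER BY machine_id",
    (THRESHOLD,),
).fetchall()
conn.close()
```

Here `rows` holds one tuple per machine whose average vibration exceeds the threshold. The same aggregate-then-filter pattern applies to larger stores, although dedicated time-series databases typically add their own windowing and downsampling functions.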

The choice of tools typically depends on the problem domain, data availability, organizational capabilities, and the scale of deployment. A well-rounded data science stack in manufacturing blends statistical rigor with scalable computing, robust data governance, and clear integration with industrial control systems and business processes.
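The forecasting entry in the list above refers to forecasts that carry an uncertainty estimate. As a hedged sketch of that idea, the function below implements simple exponential smoothing with an approximate one-step-ahead interval derived from in-sample errors. It uses only the standard library and is not the API of Prophet or statsmodels; the function name `ses_forecast`, its parameters, and the sample demand figures are assumptions, and the interval relies on the simplifying assumption that forecast errors are roughly normal.

```python
from statistics import NormalDist


def ses_forecast(series, alpha=0.3, confidence=0.95):
    """One-step-ahead simple exponential smoothing forecast.

    Returns (forecast, lower, upper). The interval is a rough approximation
    based on the root-mean-square of in-sample one-step errors.
    """
    if len(series) < 3:
        raise ValueError("need at least three observations")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")

    level = series[0]  # initialise the level with the first observation
    errors = []
    for y in series[1:]:
        errors.append(y - level)                 # one-step-ahead error
        level = alpha * y + (1 - alpha) * level  # smoothing update

    rmse = (sum(e * e for e in errors) / len(errors)) ** 0.5
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return level, level - z * rmse, level + z * rmse


# Hypothetical weekly demand in units.
weekly_demand = [120, 132, 128, 141, 135, 150, 147, 158]
forecast, lower, upper = ses_forecast(weekly_demand)
```

Simple exponential smoothing ignores trend and seasonality, so for demand with clear seasonal patterns a library model with explicit seasonal components would usually be a better fit.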

The Future of Data Science in Manufacturing

Automation and simulation are already delivering precise production outcomes in many sectors, and the next decade is expected to bring further transformation driven by data science and advanced manufacturing technologies. Continued growth will be fueled by the ever-increasing volume of data generated by IoT devices, sensors, connected machinery, and digital twins, creating richer opportunities for analytics and optimization.

The Industrial Internet of Things (IIoT) will continue to be a central driver of data exchange within manufacturing organizations and across value chains. Real-time data sharing across departments and with partner networks enables more coordinated decision-making, faster response to anomalies, and improved traceability. Organizations will increasingly rely on standardized data models, interoperable interfaces, and secure data-sharing practices to unlock cross-functional insights and align operations with strategic goals.

Augmented reality (AR) and mixed reality (MR) technologies will complement automated systems by providing technicians with immersive, data-rich guidance on the shop floor. With AR overlays, engineers can access real-time sensor data, maintenance histories, and procedural checklists within their field of view, enabling more efficient troubleshooting and more accurate maintenance tasks. This cognitive augmentation integrates human expertise with automated intelligence to accelerate problem solving and enhance quality.

The continued maturation of digital twins will deepen the synergy between product design and manufacturing. Dynamic, data-informed twins will model not only component behavior but also assembly processes, supply chain interactions, and energy consumption. Simulations will enable rapid design refinement, process optimization, and risk assessment across product lifecycles. This holistic perspective supports more accurate forecasting, better decision-making, and faster time-to-market with lower risk.

Sustainable manufacturing will be increasingly enabled by data science. Analytics will optimize energy consumption, reduce emissions, and minimize waste throughout the production system. Life cycle assessment (LCA) data, material optimization, and recycling and remanufacturing strategies will be integrated with production analytics to support circular economy initiatives and responsible resource use.

Security and privacy will continue to be central to the future of data-driven manufacturing. As data flows expand across plants and supply networks, robust cybersecurity measures, privacy-preserving analytics, and compliance with evolving regulations will be essential. The balance between openness for collaboration and protection of sensitive information will shape governance frameworks and the design of analytical ecosystems.

The workforce of the future will be shaped by ongoing upskilling and new collaboration models. Data fluency across the organization, cross-disciplinary teams, and continuous learning will become expectations rather than exceptions. As manufacturing processes become more autonomous, human talent will focus on higher-value tasks, including strategy, design, and complex problem solving that leverage data-driven insights.

In summary, the future of data science in manufacturing is a convergence of intelligent automation, immersive decision support, and sustainable production practices. The ongoing interplay between data, machines, and humans will yield a manufacturing landscape characterized by heightened efficiency, resilience, and innovation. Organizations that invest in the right data infrastructure, governance, talent, and cross-functional collaboration will be well positioned to capture the full spectrum of benefits that data science offers for modern manufacturing.

Conclusion

Data science is redefining the core of manufacturing, from design and development to production, quality assurance, and supply chain optimization. Industry 4.0 and IIoT lay the groundwork for a connected, intelligent production environment where data-driven decision-making enhances efficiency, reduces waste, and improves product quality. The most impactful applications—demand forecasting and inventory management, computer vision for inspection, design and development improvements, supply chain optimization, and proactive maintenance—demonstrate the breadth of analytics’ reach across the manufacturing value chain.

The path forward is promising, but it is not without challenges. The industry must address talent gaps, manage vast and diverse data, navigate governance concerns, integrate with legacy systems, ensure security and compliance, and cultivate organizational readiness for data-driven change. Selecting the right tools, establishing scalable data pipelines, and embedding analytics into everyday workflows are essential steps toward realizing durable benefits.

As manufacturers continue to invest in digital capabilities, the future of data science in manufacturing will be defined by more advanced analytics, real-time intelligence, and deeply integrated simulations. Digital twins, AR-assisted workflows, and prescriptive optimization will become commonplace, enabling smarter decisions at every stage of the product lifecycle. Those who embrace data-driven transformation with clear governance, cross-functional collaboration, and a focus on value creation will set new benchmarks for performance, competitiveness, and sustainability in modern manufacturing.